1. Overview

Goals

Firebase ML enables you to deploy your model over-the-air. This allows you to keep the app size small and only download the ML model when needed, experiment with multiple models, or update your ML model without having to republish the entire app.

In this codelab you will convert an iOS app using a static TFLite model into an app using a model dynamically served from Firebase. You will learn how to:

- Deploy TFLite models to Firebase ML and access them from your app

- Log model-related metrics with Analytics

- Select which model is loaded through Remote Config

- A/B test different models

Prerequisites

Before starting this codelab, make sure you have installed:

- Xcode 11 (or higher)

- CocoaPods 1.9.1 (or higher)

2. Create Firebase console project

Add Firebase to the project

- Go to the Firebase console.

- Select Create New Project and name your project "Firebase ML iOS Codelab".

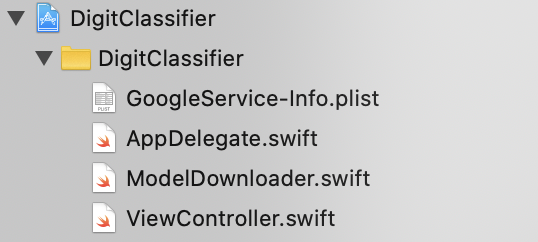

3. Get the Sample Project

Download the Code

Begin by cloning the sample project and running pod install --repo-update in the project directory:

git clone https://github.com/FirebaseExtended/codelab-digitclassifier-ios.git
cd codelab-digitclassifier-ios
pod install --repo-update

If you don't have git installed, you can also download the sample project from its GitHub page or by clicking on this link. Once you've downloaded the project, run it in Xcode and play around with the digit classifier to get a feel for how it works.

Set up Firebase

Follow the documentation to create a new Firebase project. Once you've got your project, download your project's GoogleService-Info.plist file from Firebase console and drag it to the root of the Xcode project.

Add Firebase to your Podfile and run pod install.

pod 'FirebaseMLModelDownloader', '9.3.0-beta'

In AppDelegate.swift, import FirebaseCore at the top of the file:

import FirebaseCore

Then add a call to configure Firebase in the didFinishLaunchingWithOptions method:

FirebaseApp.configure()

Run the project again to make sure the app is configured correctly and does not crash on launch.

4. Deploy a model to Firebase ML

Deploying a model to Firebase ML is useful for two main reasons:

- We can keep the app install size small and only download the model if needed

- The model can be updated regularly and with a different release cycle than the entire app

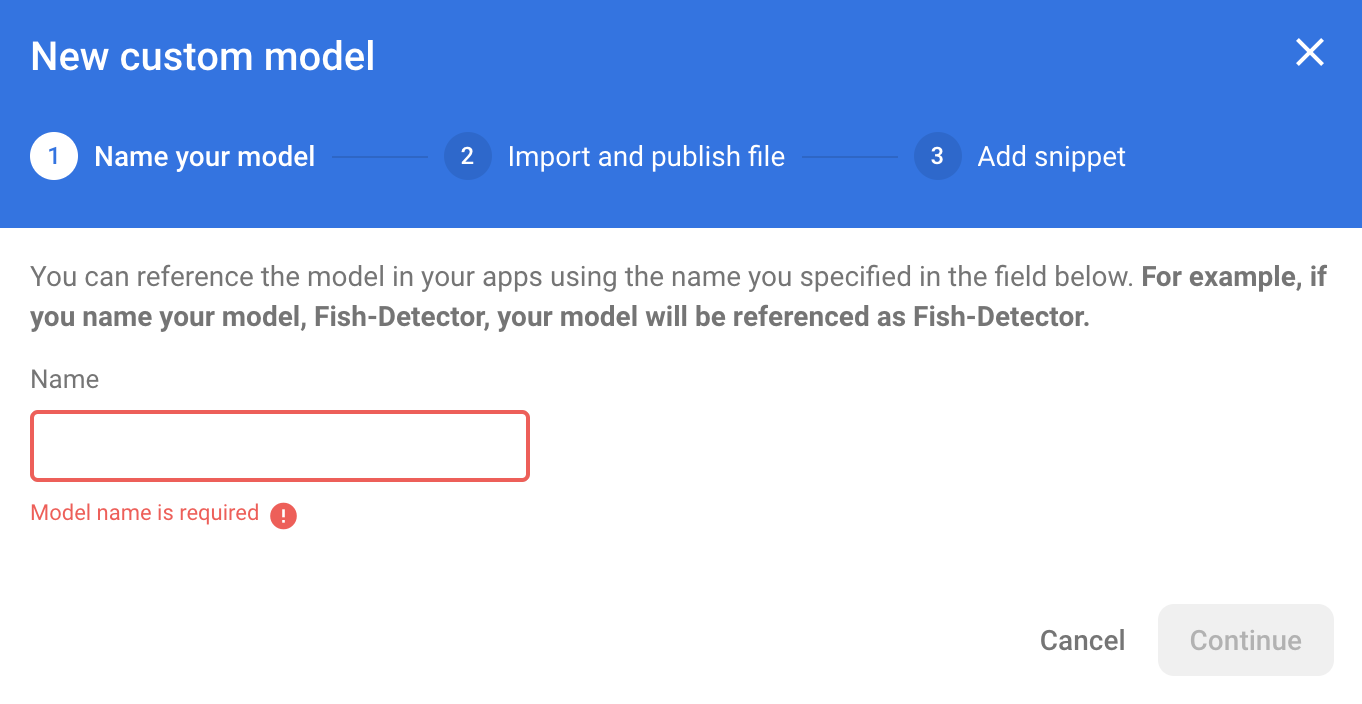

Before we can replace the static model in our app with a dynamically downloaded model from Firebase, we need to deploy it to Firebase ML. The model can be deployed either via the console, or programmatically, using the Firebase Admin SDK. In this step we will deploy via the console.

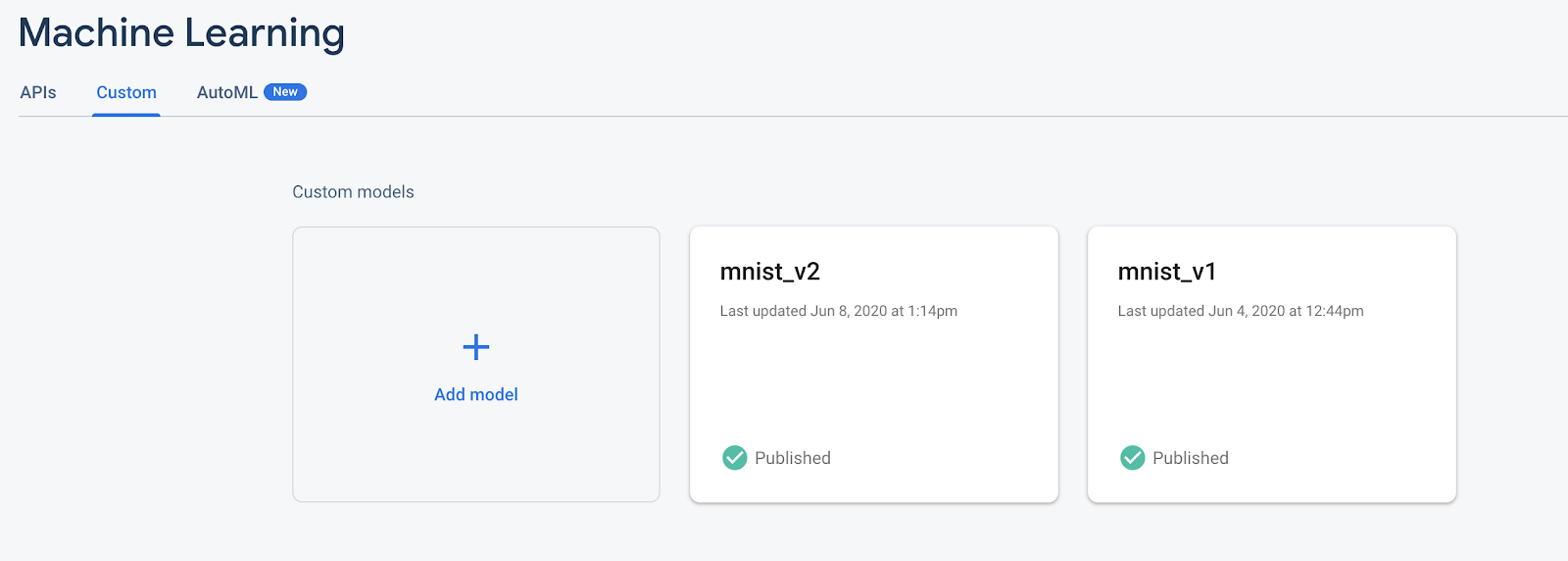

To keep things simple, we'll use the TensorFlow Lite model that's already in our app. First, open the Firebase console and click on Machine Learning in the left navigation panel. Then navigate to "Custom" and click on the "Add Model" button.

When prompted, give the model a descriptive name like mnist_v1 and upload the file from the codelab project directory.

5. Download model from Firebase ML

Choosing when to download the remote model from Firebase into your app can be tricky since TFLite models can grow relatively large. Ideally we want to avoid loading the model immediately when the app launches, since if our model is used for only one feature and the user never uses that feature, we'll have downloaded a significant amount of data for no reason. We can also set download options such as only fetching models when connected to wifi. If you want to ensure that the model is available even without a network connection, you should also bundle the model as part of the app as a backup.
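The backup idea above can be sketched as a small decision function. This is a minimal illustration, not code from the sample project: ModelSource and resolveModelSource are hypothetical names, and the file-existence check is passed in as a parameter so the logic can be tried without real files.

```swift
import Foundation

/// Where the model file handed to the classifier came from.
/// (Hypothetical type, not part of the sample project.)
enum ModelSource: Equatable {
  case remote(path: String)
  case bundled(path: String)
}

/// Prefers the downloaded model and falls back to the bundled copy.
/// `fileExists` is injectable so the decision can be exercised without touching the disk.
func resolveModelSource(
  remotePath: String?,
  bundledPath: String?,
  fileExists: (String) -> Bool = { FileManager.default.fileExists(atPath: $0) }
) -> ModelSource? {
  if let remotePath = remotePath, fileExists(remotePath) {
    return .remote(path: remotePath)
  }
  if let bundledPath = bundledPath, fileExists(bundledPath) {
    return .bundled(path: bundledPath)
  }
  // No usable model: the feature should be disabled or retried later.
  return nil
}
```

In an app, remotePath would typically be the path of the downloaded CustomModel, and bundledPath would come from the app bundle.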

For the sake of simplicity, we'll remove the default bundled model and always download a model from Firebase when the app starts. This way when running digit recognition you can be sure that the inference is running with the model provided from Firebase.

At the top of ModelLoader.swift, import the Firebase module.

import FirebaseCore
import FirebaseMLModelDownloader

Then implement the following method.

static func downloadModel(named name: String,
                          completion: @escaping (CustomModel?, DownloadError?) -> Void) {
  guard FirebaseApp.app() != nil else {
    completion(nil, .firebaseNotInitialized)
    return
  }
  guard success == nil && failure == nil else {
    completion(nil, .downloadInProgress)
    return
  }
  let conditions = ModelDownloadConditions(allowsCellularAccess: false)
  ModelDownloader.modelDownloader().getModel(name: name, downloadType: .localModelUpdateInBackground, conditions: conditions) { result in
    switch result {
    case .success(let customModel):
      // Download complete.
      // The CustomModel object contains the local path of the model file,
      // which you can use to instantiate a TensorFlow Lite classifier.
      completion(customModel, nil)
    case .failure(let error):
      // Download was unsuccessful. Report the error to the caller.
      completion(nil, .downloadFailed(underlyingError: error))
    }
  }
}

In ViewController.swift's viewDidLoad, replace the DigitClassifier initialization call with our new model download method.

// Download the model from Firebase
print("Fetching model...")
ModelLoader.downloadModel(named: "mnist_v1") { (customModel, error) in
  guard let customModel = customModel else {
    if let error = error {
      print(error)
    }
    return
  }
  print("Model download complete")
  // Initialize a DigitClassifier instance
  DigitClassifier.newInstance(modelPath: customModel.path) { result in
    switch result {
    case let .success(classifier):
      self.classifier = classifier
    case .error(_):
      self.resultLabel.text = "Failed to initialize."
    }
  }
}

Re-run your app. After a few seconds, you should see a log in Xcode indicating the remote model has successfully downloaded. Try drawing a digit and confirm the behavior of the app has not changed.

6. Track user feedback and conversion to measure model accuracy

We will measure the accuracy of the model by tracking user feedback on its predictions. If a user taps "Yes", the prediction was accurate.

We can log an Analytics event to track the accuracy of our model. First, add Analytics to the Podfile and run pod install:

pod 'FirebaseAnalytics'

Then, in ViewController.swift, import FirebaseAnalytics at the top of the file:

import FirebaseAnalytics

And add the following line of code in the correctButtonPressed method.

Analytics.logEvent("correct_inference", parameters: nil)
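A single correct_inference event counts the hits but not the misses. As a hedged sketch of one way to extend the idea, the helper below builds an event for either answer and tags it with the model that produced the prediction. The incorrect_inference event name, the model_name parameter, and the FeedbackEvent type are assumptions made for illustration; they are not part of the codelab.

```swift
import Foundation

/// A name/parameter pair in the shape accepted by Analytics.logEvent(_:parameters:).
/// (Hypothetical type, not part of the sample project.)
struct FeedbackEvent: Equatable {
  let name: String
  let parameters: [String: String]
}

/// Builds the event for a user's answer to "was this prediction right?".
/// "incorrect_inference" and "model_name" are hypothetical additions.
func feedbackEvent(wasCorrect: Bool, modelName: String) -> FeedbackEvent {
  return FeedbackEvent(
    name: wasCorrect ? "correct_inference" : "incorrect_inference",
    parameters: ["model_name": modelName]
  )
}
```

The result could then be passed along as Analytics.logEvent(event.name, parameters: event.parameters) from the button handlers.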

Run the app again and draw a digit. Press the "Yes" button a couple of times to send feedback that the inference was accurate.

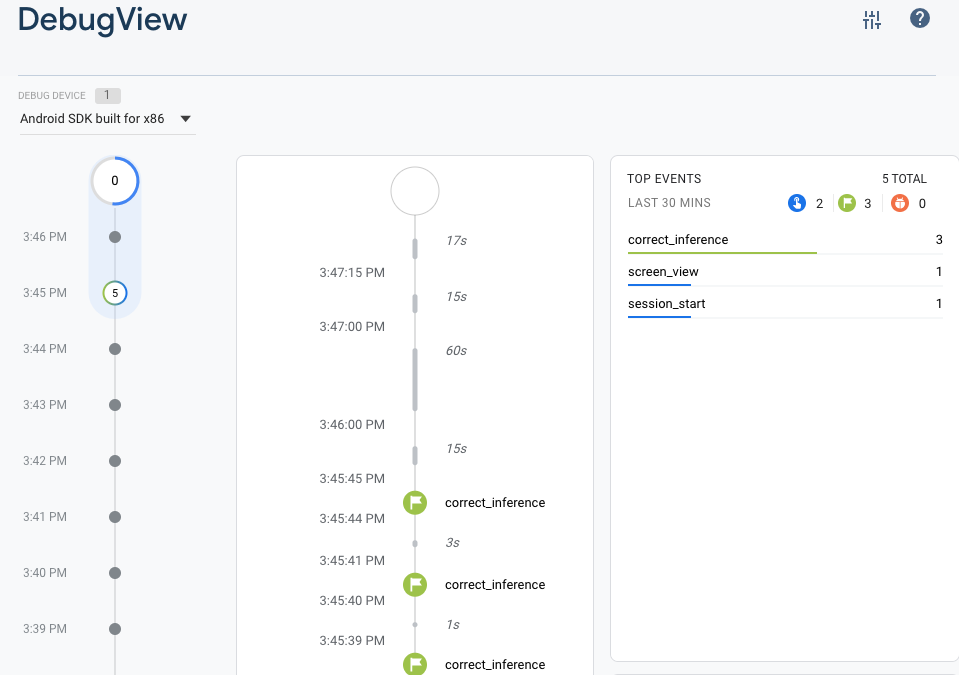

Debug analytics

Generally, events logged by your app are batched together over a period of approximately one hour and uploaded together. This approach conserves the battery on end users' devices and reduces network data usage. However, to validate your analytics implementation and to view your events in the DebugView report, you can enable Debug mode on your development device so that events are uploaded with minimal delay.

To enable Analytics Debug mode on your development device, specify the following command line argument in Xcode:

-FIRDebugEnabled
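In Xcode, this argument goes under Product > Scheme > Edit Scheme > Run > Arguments Passed On Launch. Debug mode stays on until you launch with -FIRDebugDisabled. If you want to confirm that the flag actually reaches your process, a small check like the following can help. The isAnalyticsDebugEnabled helper is a hypothetical name used only for this sketch.

```swift
import Foundation

/// Returns true when the Analytics debug flag is present in the launch arguments.
/// (Hypothetical helper; it only inspects the arguments and does not configure Firebase.)
func isAnalyticsDebugEnabled(
  arguments: [String] = ProcessInfo.processInfo.arguments
) -> Bool {
  return arguments.contains("-FIRDebugEnabled")
}
```

Printing the result once at launch is enough to catch a flag that was added to the wrong scheme.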

Run the app again and draw a digit. Press the "Yes" button a couple of times to send feedback that the inference was accurate. Now you can view the log events in near real time via the debug view in the Firebase console. Click on Analytics > DebugView from the left navigation bar.

7. Track inference time with Firebase Performance

When testing your model, performance metrics made on development devices aren't sufficient to capture how the model will perform in your users' hands, since it's difficult to tell what hardware users will be running your app on. Fortunately, you can measure your model's performance on users' devices with Firebase Performance to get a better picture of your model's performance.

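To measure the time it takes to run inference, first make sure the FirebasePerformance pod is in your Podfile (add it and run pod install if it is missing), then import it in DigitClassifier.swift: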

import FirebasePerformance

Then start a performance trace in the classify method and stop the trace when the inference is complete. Make sure you add the following lines of code inside the DispatchQueue.global.async closure and not directly below the method declaration.

let inferenceTrace = Performance.startTrace(name: "tflite inference")
defer {
  inferenceTrace?.stop()
}

If you're curious, you can enable debug logging via the instructions here to confirm your performance traces are being logged. After a while, the performance traces will be visible in Firebase Console as well.
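The defer block is what makes the trace reliable: it runs whenever the enclosing scope exits, including on early returns. The sketch below shows the same pattern without Firebase. StopwatchTrace is a hypothetical stand-in for a performance trace, and classify(scores:trace:) is a toy argmax rather than the sample's real inference code.

```swift
import Foundation

/// Stand-in for a performance trace; it only records elapsed wall-clock time.
/// (Hypothetical type, not the Firebase Performance API.)
final class StopwatchTrace {
  let name: String
  private let start = Date()
  private(set) var elapsed: TimeInterval?

  init(name: String) {
    self.name = name
  }

  /// Records the elapsed time once; later calls are ignored.
  func stop() {
    if elapsed == nil {
      elapsed = Date().timeIntervalSince(start)
    }
  }
}

/// Returns the index of the highest score, or nil for empty input.
func classify(scores: [Float], trace: StopwatchTrace) -> Int? {
  // Runs on both the early return and the normal return below.
  defer { trace.stop() }
  guard !scores.isEmpty else { return nil }
  return scores.indices.max(by: { scores[$0] < scores[$1] })
}
```

Placing the defer inside the asynchronous closure, as the step above asks, matters for the same reason: a defer placed directly below the method declaration would fire when the method returns, before the background work has run.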

8. Deploy a second model to Firebase ML

When you come up with a new version of your model, such as one with a better architecture or one trained on a larger or updated dataset, you may feel tempted to replace the current model with the new version. However, a model that performs well in testing does not necessarily perform equally well in production. Therefore, let's do A/B testing in production to compare our original model and the new one.

Enable Firebase Model Management API

In this step, we will enable the Firebase Model Management API to deploy a new version of our TensorFlow Lite model using Python code.

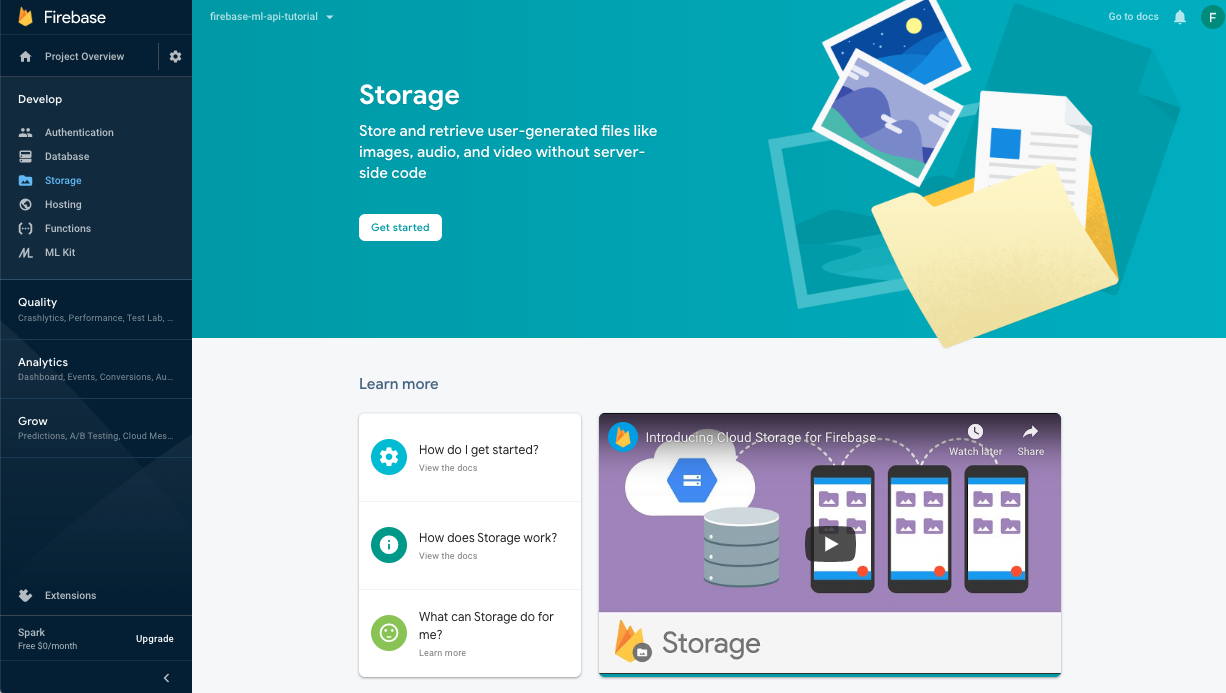

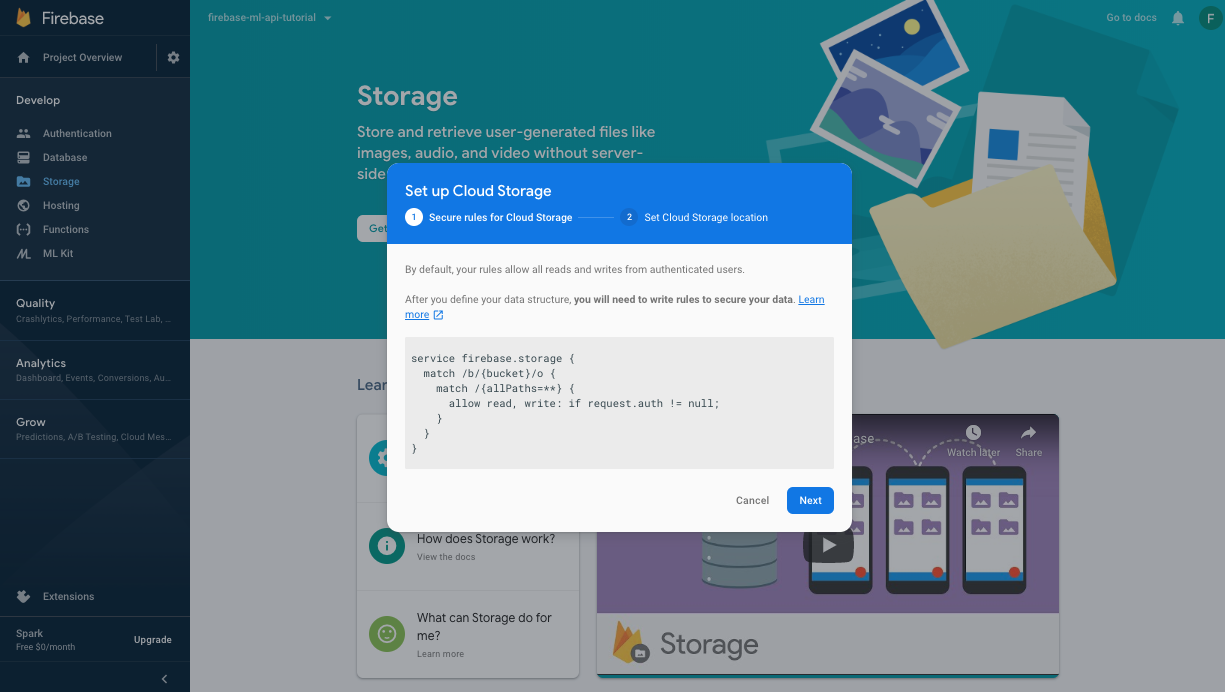

Create a bucket to store your ML models

In your Firebase Console, go to Storage and click Get started.

Follow the dialog to get your bucket set up.

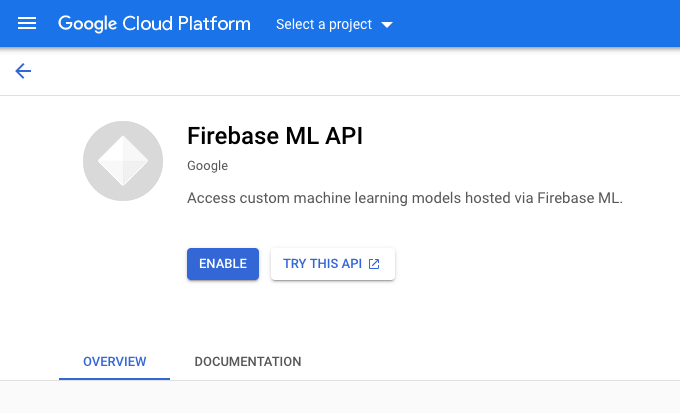

Enable Firebase ML API

Go to the Firebase ML API page in the Google Cloud Console and click Enable.

Select the Digit Classifier app when asked.

Now we will train a new version of the model by using a larger dataset, and we will then deploy it programmatically directly from the training notebook using the Firebase Admin SDK.

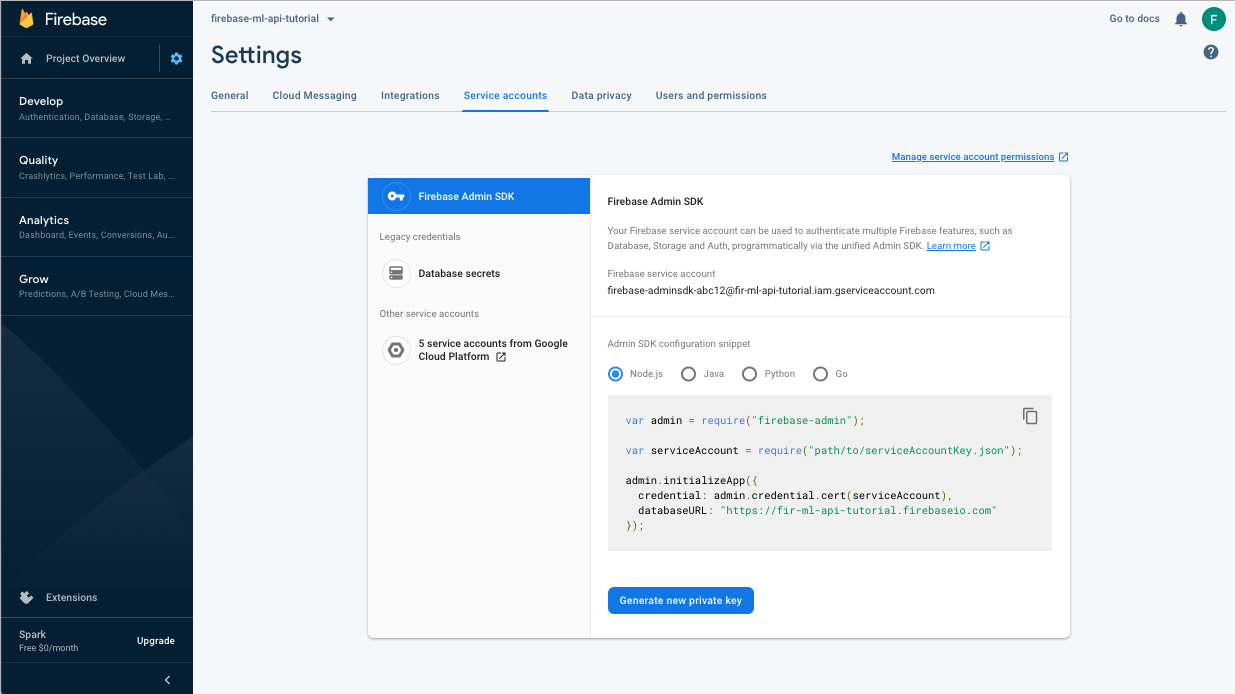

Download the private key for service account

Before we can use the Firebase Admin SDK, we'll need to create a service account. Open the Service Accounts panel of Firebase console by clicking this link and click on the button to create a new service account for the Firebase Admin SDK. When prompted, click the Generate New Private Key button. We'll use the service account key for authenticating our requests from the colab notebook.

Now we can train and deploy the new model.

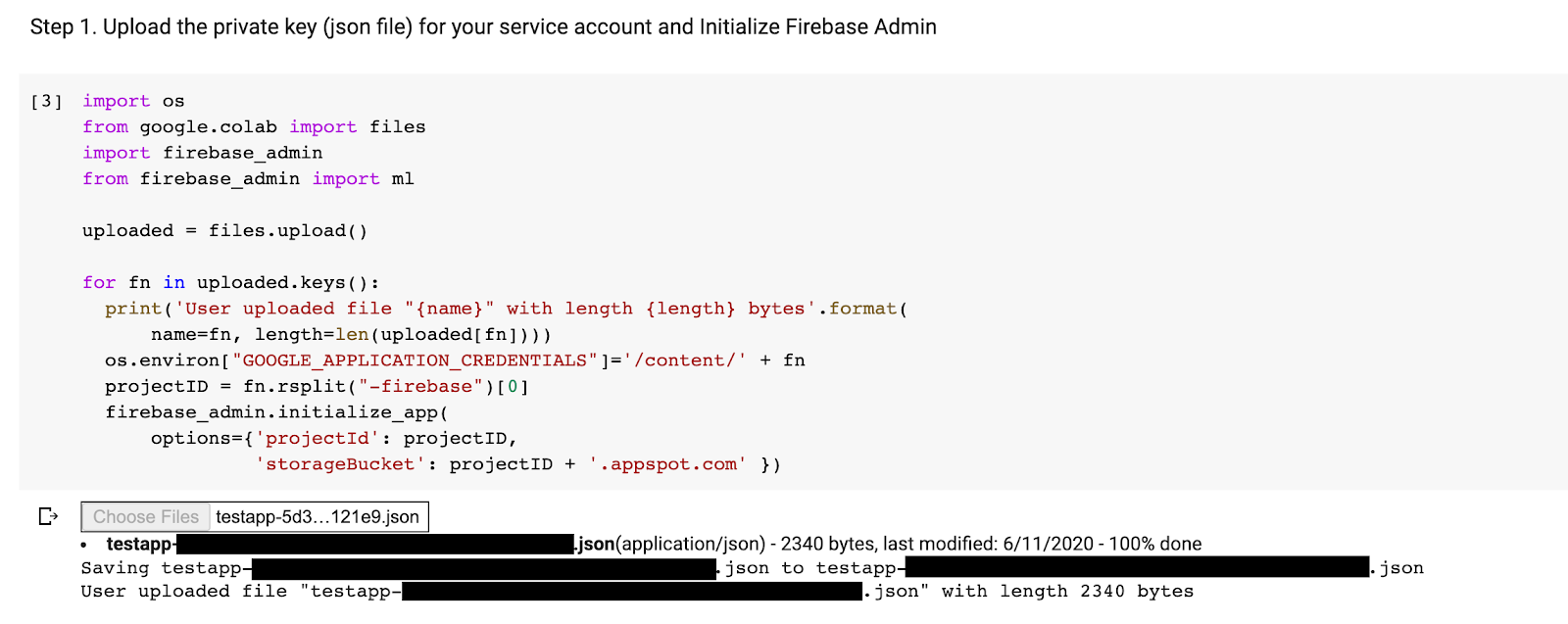

- Open this colab notebook and make a copy of it under your own Drive.

- Run the first cell "Train an improved TensorFlow Lite model" by clicking on the play button to the left of it. This will train a new model and may take some time.

- Running the second cell will create a file upload prompt. Upload the json file you downloaded from Firebase Console when creating your service account.

- Run the last two cells.

After running the colab notebook, you should see a second model in Firebase console. Make sure the second model is named mnist_v2.

9. Select a model via Remote Config

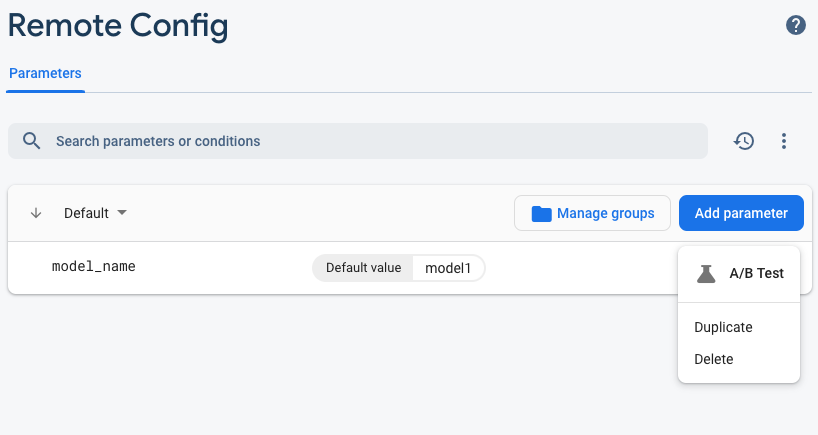

Now that we have two separate models, we'll add a parameter for selecting which model to download at runtime. The value of the parameter the client receives will determine which model the client downloads. First, open up the Firebase console and click on the Remote Config button in the left nav menu. Then, click on the "Add Parameter" button.

Name the new parameter model_name and give it a default value of mnist_v1. Click Publish Changes to apply the updates. By putting the name of the model in the remote config parameter, we can test multiple models without adding a new parameter for every model we want to test.

After adding the parameter, you should see it listed in the console.

In our code, we'll need to add a check when loading the remote model. When we receive the parameter from Remote Config, we'll fetch the remote model with the corresponding name; otherwise we'll attempt to load mnist_v1. Before we can use Remote Config, we have to add it to our project by specifying it as a dependency in the Podfile:

pod 'FirebaseRemoteConfig'

Run pod install and re-open the Xcode project. In ModelLoader.swift, import FirebaseRemoteConfig at the top of the file and implement the fetchParameterizedModel method.

static func fetchParameterizedModel(completion: @escaping (CustomModel?, DownloadError?) -> Void) {
  RemoteConfig.remoteConfig().fetchAndActivate { (status, error) in
    DispatchQueue.main.async {
      if let error = error {
        let compositeError = DownloadError.downloadFailed(underlyingError: error)
        completion(nil, compositeError)
        return
      }
      let modelName: String
      if let name = RemoteConfig.remoteConfig().configValue(forKey: "model_name").stringValue {
        modelName = name
      } else {
        let defaultName = "mnist_v1"
        print("Unable to fetch model name from config, falling back to default \(defaultName)")
        modelName = defaultName
      }
      downloadModel(named: modelName, completion: completion)
    }
  }
}
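The fallback in the method above only triggers when the config value is nil. Depending on the SDK version, a parameter with no value may instead come back as an empty string, which would then be passed to downloadModel as a model name. The sketch below is one hedged way to cover both cases; resolveModelName is a hypothetical helper, not part of the sample project or the Remote Config API.

```swift
import Foundation

/// Picks the model to download, treating nil, empty, and whitespace-only
/// values as "not set". (Hypothetical helper.)
func resolveModelName(configValue: String?, defaultName: String = "mnist_v1") -> String {
  guard let value = configValue?.trimmingCharacters(in: .whitespacesAndNewlines),
        !value.isEmpty else {
    return defaultName
  }
  return value
}
```

With a helper like this, the if/else in fetchParameterizedModel could collapse to a single call that takes the Remote Config string value as its input.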

Finally, in ViewController.swift, replace the downloadModel call with the new method we just implemented.

// Download the model from Firebase
print("Fetching model...")
ModelLoader.fetchParameterizedModel { (customModel, error) in
  guard let customModel = customModel else {
    if let error = error {
      print(error)
    }
    return
  }
  print("Model download complete")
  // Initialize a DigitClassifier instance
  DigitClassifier.newInstance(modelPath: customModel.path) { result in
    switch result {
    case let .success(classifier):
      self.classifier = classifier
    case .error(_):
      self.resultLabel.text = "Failed to initialize."
    }
  }
}

Re-run the app and make sure it still loads the model correctly.

10. A/B Test the two models

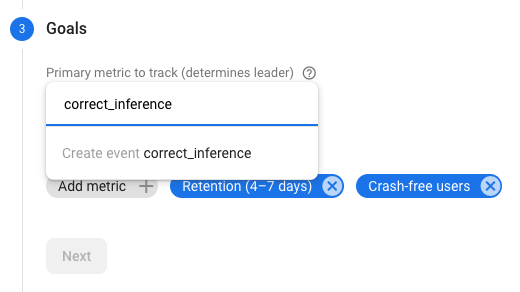

Finally, we can use Firebase's built-in A/B Testing behavior to see which of our two models is performing better. Go to Analytics -> Events in the Firebase console. If the correct_inference event is showing, mark it as a "Conversion event". If it is not showing yet, go to Analytics -> Conversion Events, click "Create a New Conversion Event", and enter correct_inference.

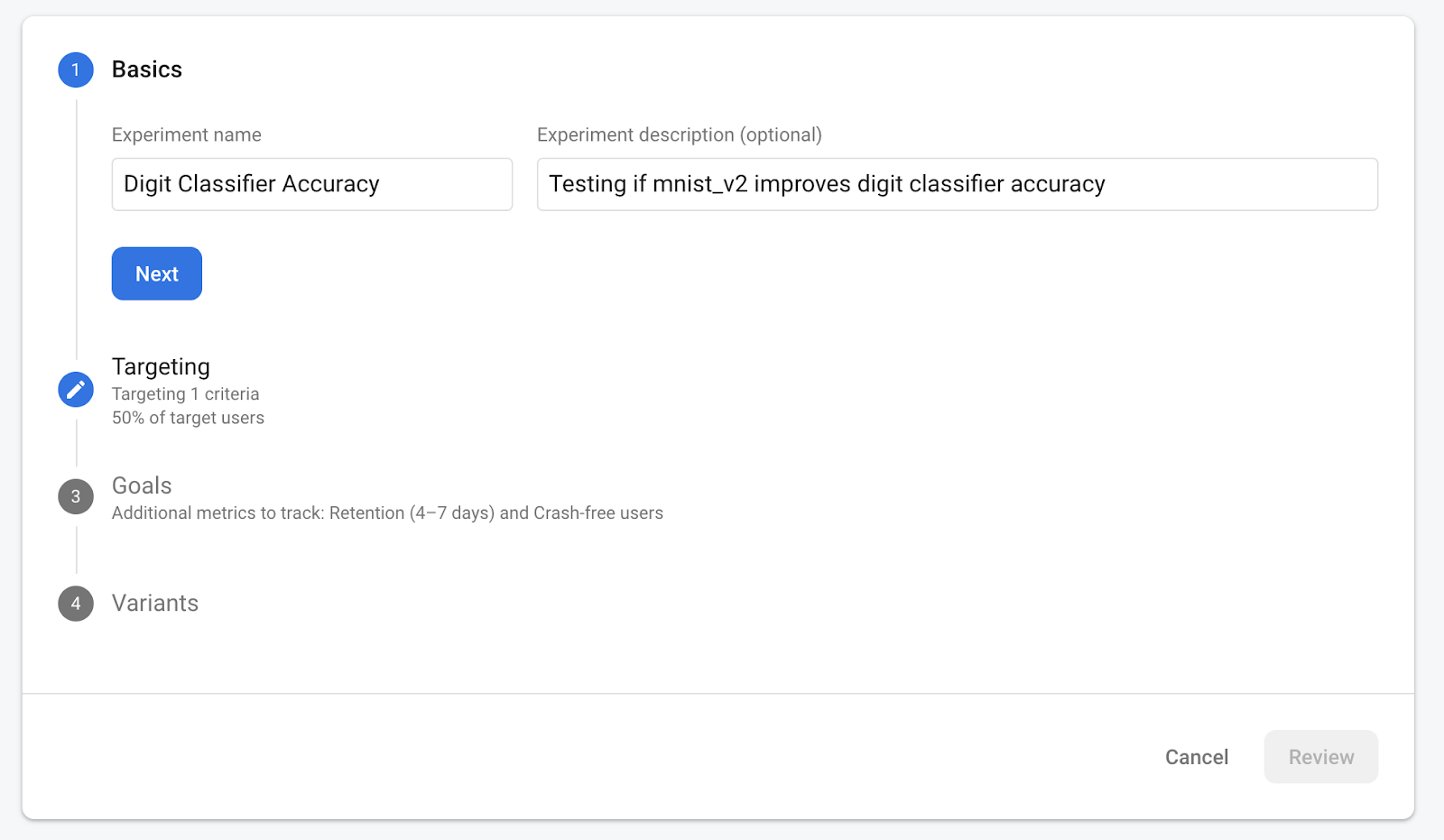

Now go to "Remote Config" in the Firebase console and select the "A/B test" button from the more options menu on the "model_name" parameter we just added.

In the menu that follows, accept the default name.

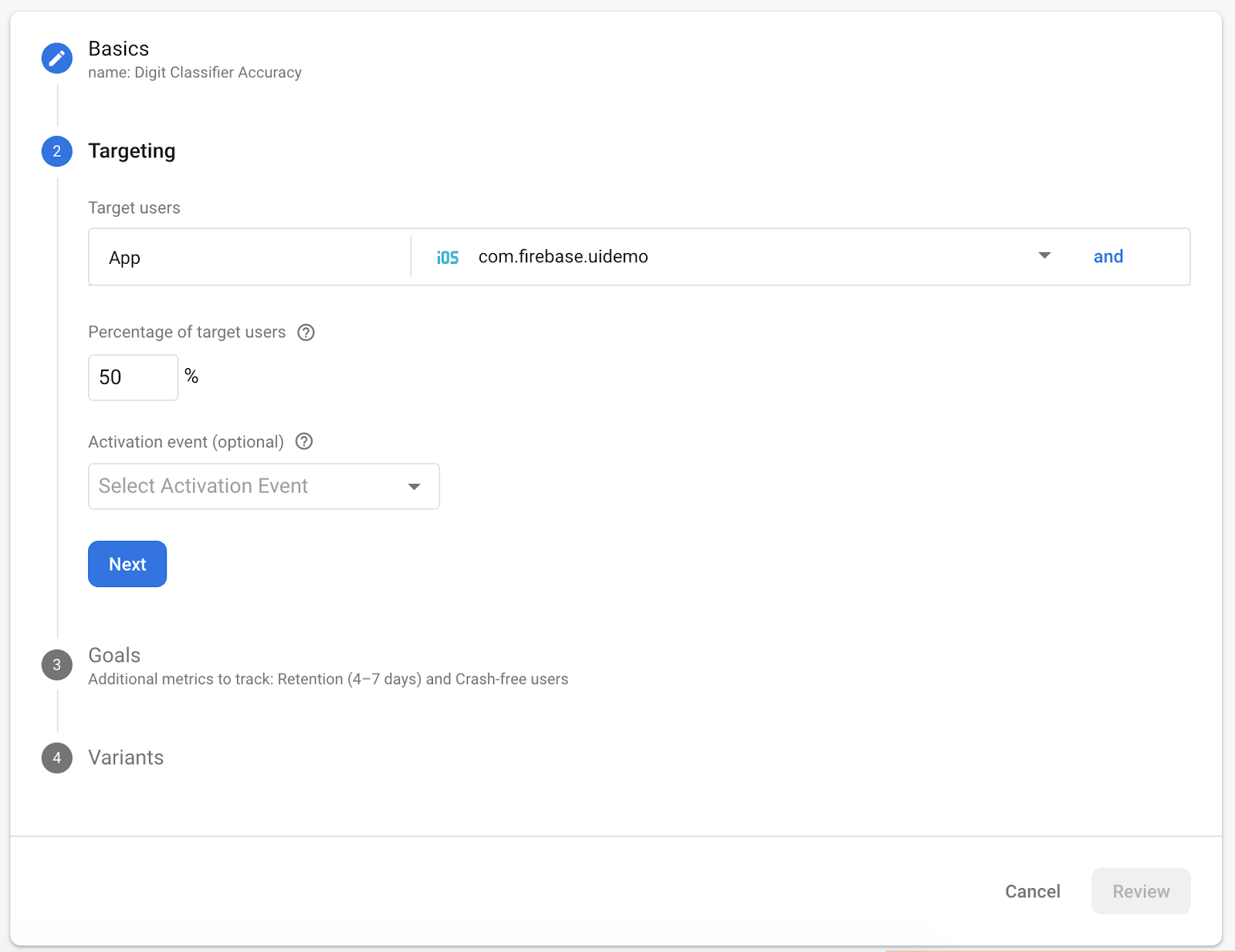

Select your app from the dropdown and change the targeting criteria to 50% of active users.

If you were able to set the correct_inference event as a conversion earlier, use this event as the primary metric to track. Otherwise, if you don't want to wait for the event to show up in Analytics, you can add correct_inference manually.

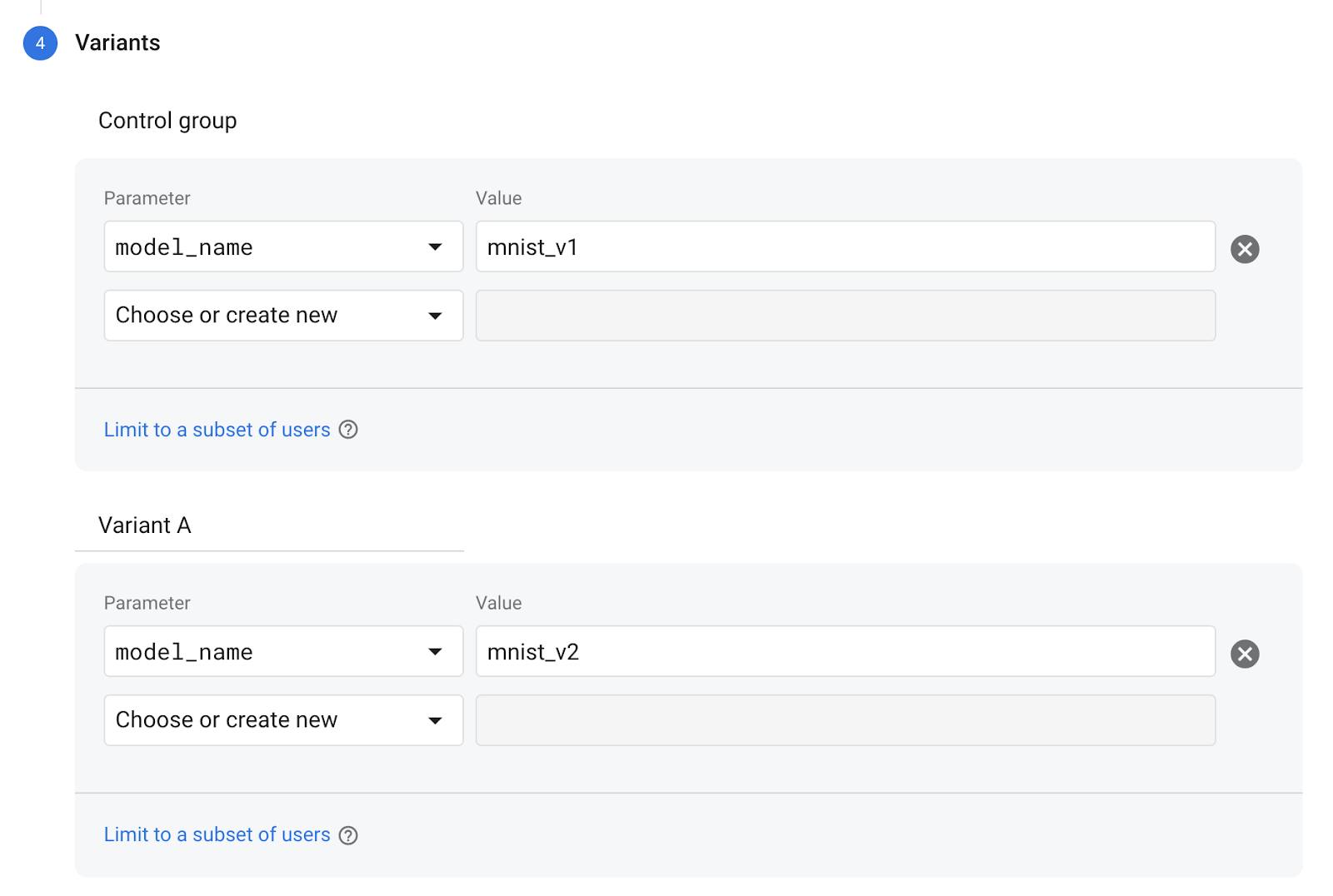

Finally, on the Variants screen, set your control group variant to use mnist_v1 and your Variant A group to use mnist_v2.

Click the Review button in the bottom right corner.

Congratulations, you've successfully created an A/B test for your two separate models! The A/B test is currently in a draft state and can be started at any time by clicking the "Start Experiment" button.

For a closer look at A/B testing, check out the A/B Testing documentation.

11. Conclusion

In this codelab, you learned how to replace a statically bundled TFLite asset in your app with a TFLite model loaded dynamically from Firebase. To learn more about TFLite and Firebase, take a look at other TFLite samples and the Firebase getting started guides.

- Firebase Machine Learning documentation

- TensorFlow Lite documentation

- A/B Testing models with Firebase